It Doesn't Do What I Want!

Why Traditional Computing Expectations Fail with Artificial Intelligence

The more I engage with educators and professionals about AI integration, the more I encounter a telling complaint: “Whenever I try to use AI, it doesn’t do what I want it to do.” This frustration reveals something deeper than technical difficulty. It exposes a fundamental misunderstanding of how AI transforms our relationship with computing. Rather than simply adding another tool to our digital toolkit, artificial intelligence introduces a paradigm shift that requires us to reconsider our basic assumptions about human-computer interaction.

Why Probabilistic Systems Behave Differently

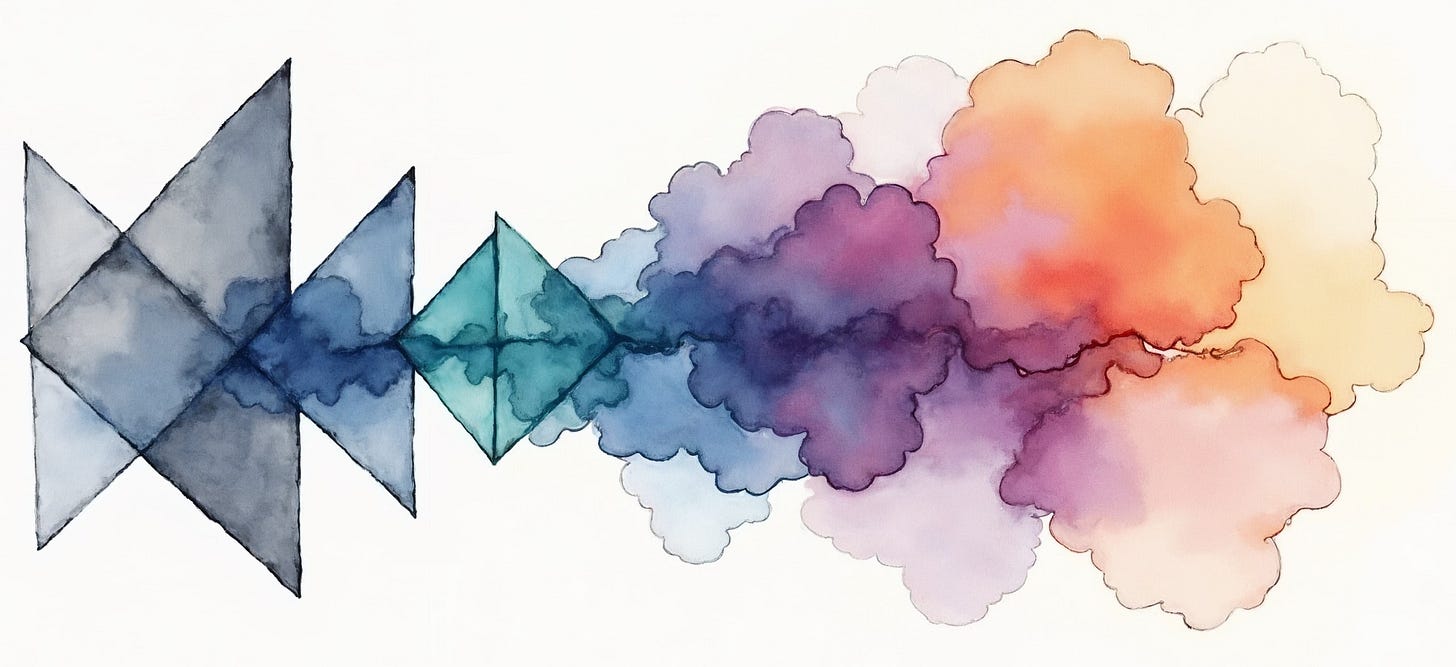

The root of this misunderstanding lies in the contrast between two substantially different computing approaches. Traditional software operates primarily through deterministic algorithms: input a formula, receive a predictable result; apply a filter, see a consistent transformation. And while modern software increasingly incorporates probabilistic elements (spell-checkers suggest corrections, search engines rank results), these features are typically built around a fundamentally deterministic core.

By contrast, generative AI amplifies the probabilistic paradigm to an unprecedented degree. These systems don’t follow a fixed sequence of rules to a single correct answer; instead, they generate outputs by sampling from statistical patterns learned from their training data. This means AI never guarantees an exact outcome. It offers a response that is probable given the provided context, and the same prompt can yield different results on different runs. Understanding this distinction is crucial for anyone seeking to work effectively with these tools.
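To make the contrast concrete, here is a minimal Python sketch. It is a toy illustration, not how any real AI product works: the word list and its probabilities in `NEXT_WORD_PROBS` are invented for the example, and `deterministic_total` and `sample_next_word` are hypothetical names. The first function always returns the same answer for the same input; the second draws from a weighted set of options, so repeated calls can differ.

```python
import random

def deterministic_total(prices):
    """Traditional computing: the same input always gives the same output."""
    return sum(prices)

# Invented probabilities for the next word of a banner headline (assumption).
NEXT_WORD_PROBS = {"blue": 0.6, "bold": 0.3, "retro": 0.1}

def sample_next_word(rng):
    """Probabilistic computing: draw one option, weighted by its probability."""
    words = list(NEXT_WORD_PROBS)
    weights = list(NEXT_WORD_PROBS.values())
    return rng.choices(words, weights=weights, k=1)[0]

if __name__ == "__main__":
    # Always prints 10, however many times it runs.
    print(deterministic_total([2, 3, 5]))

    # Unseeded generator: the five words may change from run to run.
    rng = random.Random()
    print([sample_next_word(rng) for _ in range(5)])
```

The likeliest word appears most often, but nothing forces it to appear on any given call. That is the behavior behind “it doesn’t do what I want”: the system is answering with a plausible draw, not executing a command.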

From Commands to Conversation

This probability-driven architecture transforms AI from a traditional tool into something resembling a collaborative partner. When we interact with AI, we initiate an exchange that unfolds through progressive refinement rather than single-shot execution. The system’s first response can only ever serve as a draft, a starting point for dialogue rather than a finished product.

Consider a marketing designer creating a campaign banner. With conventional software, she manipulates pixels directly through precise commands. With AI, she might begin with a prompt describing her vision, receive an initial generation, then guide the system through several rounds of adjustment. She refines the color palette here, adjusts the composition there, perhaps regenerating specific elements while preserving others. After three or four iterations, she achieves a result that meets brand guidelines in half the usual time. The process resembles a conversation more than command execution.

This shift demands new skills: recognizing promising directions in initial outputs, identifying elements worth preserving, and articulating modifications that guide the system toward the intended outcome. Success depends less on memorizing commands and more on developing what we might call “conversational competence” with AI systems.

The Creative Professional’s Adaptation

For those in creative fields, this transformation carries particular weight. Many designers, writers, and artists initially report feeling that AI threatens their expertise: their ability to translate vision into reality. Yet this reaction often stems from approaching AI with deterministic expectations. When creators embrace the technology’s collaborative nature, they discover it can accelerate exploration of creative possibilities, suggest unexpected directions, and enable rapid prototyping of ideas.

The key lies in reframing expertise. Rather than defining professional skill as the ability to execute a predetermined vision, we might understand it as the capacity to guide an iterative process toward innovative outcomes. This aligns with how creative work actually unfolds (through drafts, revisions, and experimentation) but now with an active artificial participant in that process.

Broader Implications for Digital Literacy

These lessons from creative workflows illuminate a broader transformation in professional computing. Just as designers must learn to guide AI through successive refinements, professionals across disciplines need to develop similar capabilities. This represents a significant shift in what we mean by digital literacy.

Traditional computer education emphasizes command mastery: learning specific features, memorizing shortcuts, following prescribed workflows. AI literacy requires different competencies: understanding statistical outputs, developing strategies for incremental improvement, and cultivating judgment about when an AI-generated result serves its purpose. Educational programs must evolve accordingly, teaching not just how to prompt AI systems but how to think probabilistically about their outputs and engage in productive iteration.

Many modern AI tools also offer some control over randomness through parameters such as temperature, which governs how much variation the system allows itself, and seed values, which make a given random draw repeatable. These settings let users balance creativity with consistency. Understanding them becomes part of the new literacy, helping professionals navigate between exploration and convergence as their projects demand.
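The effect of these two controls can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the implementation of any particular tool: the style names in `STYLES` and their scores in `SCORES` are invented, `softmax_with_temperature` and `pick_style` are hypothetical names, and real services may expose or interpret these parameters differently. The sketch divides each score by the temperature before converting scores to probabilities, and uses a seed to make the random draw repeatable.

```python
import math
import random

# Invented candidate styles and raw preference scores (assumption).
STYLES = ["minimal", "playful", "vintage"]
SCORES = [2.0, 1.0, 0.5]

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def pick_style(temperature, seed=None):
    """Sample one style; passing the same seed repeats the same draw."""
    rng = random.Random(seed)
    probs = softmax_with_temperature(SCORES, temperature)
    return rng.choices(STYLES, weights=probs, k=1)[0]

if __name__ == "__main__":
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(SCORES, t)
        print(t, [round(p, 3) for p in probs])

    # Same seed and temperature: the two picks are identical.
    print(pick_style(1.0, seed=42), pick_style(1.0, seed=42))
```

At a low temperature nearly all the probability sits on the top-scoring option, which suits convergence on a known direction. At a higher temperature the options even out, which suits exploration. Fixing the seed is what lets a user return to a result they liked.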

Turning Frustration into Fluency

The complaint “AI doesn’t do what I want” marks a transitional moment in our technological evolution. It reflects the discomfort of applying old mental models to new systems. As Donald Schön observed in The Reflective Practitioner, expertise often involves a conversation with the situation, and AI makes that conversation literal.

Those who thrive in this unfamiliar landscape will embrace the shift from deterministic to probabilistic thinking. They’ll develop what we might call an iterative mindset, viewing each AI interaction as part of a design process rather than a failed command. They’ll recognize that the technology’s apparent limitation (its inability to read our minds and deliver perfect results immediately) actually opens new possibilities for discovery and innovation.

This transformation extends beyond individual practice to reshape entire fields. As more professionals develop fluency with probability-driven systems, we’ll see new forms of human-AI collaboration, and of human-computer collaboration more generally, emerge. The question isn’t whether AI can replace human creativity or judgment, but how we can orchestrate productive partnerships between human intention and machine capability.

By understanding AI’s fundamental nature, not as a disappointing command executor but as a probabilistic collaborator, we position ourselves to harness its genuine potential. The future belongs to those who can navigate this new paradigm, guiding AI through thoughtful iteration toward outcomes that may surprise and exceed our initial visions. In embracing this shift, we take part in redefining what it means to work with computers in the twenty-first century.